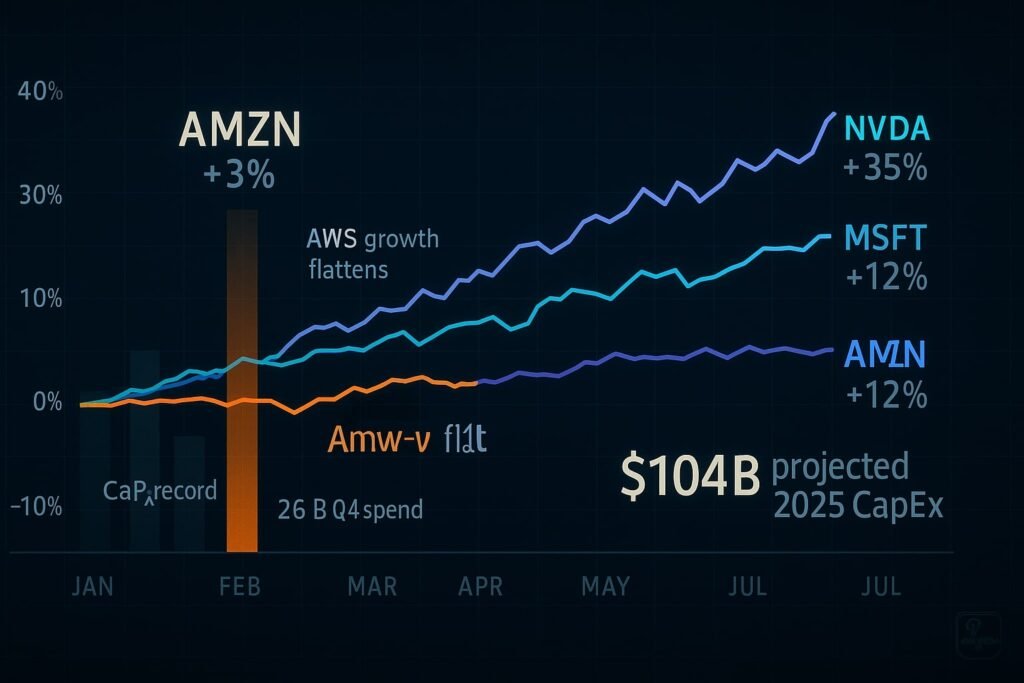

Amazon is on track to spend a staggering $104 billion in 2025 on capital expenditures—more than any other S&P 500 tech giant—to fuel its AI and cloud ambitions. Yet despite this record-breaking spending, shareholders haven’t rewarded Amazon’s stock: it’s barely budged, up only ~3% year-to-date, significantly lagging peers like Meta and Nvidia. So, why is the payoff still a question mark—and how could it flip fast?

- 1. Where Is the $104 Billion Going? The Anatomy of Amazon’s Mega‑Spending

- 2. Why the Stock Isn’t Celebrating—Yet

- 3. The Payoff Channels That Could Flip the Script

- 4. Inference Is the New Oil—And Amazon Wants the Pipeline

- 5. Amazon’s Hidden AI Edge: Retail and Corporate Automation

- 6. Why the Payoff Still Isn’t Guaranteed

- Conclusion: A Long Bet on Infrastructure Power

1. Where Is the $104 Billion Going? The Anatomy of Amazon’s Mega‑Spending

a. Cloud and AI data centers

Roughly 90% of Amazon’s capex, about $90 billion, is headed into AWS data centers, with most of that specifically earmarked for AI infrastructure. In Q4 2024 alone, capex hit $26.3 billion, setting a pace that points to a full-year spend of around $105 billion.
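The figures above can be sanity-checked with simple arithmetic. The sketch below is only a back-of-the-envelope illustration: it assumes the quarterly pace simply repeats for four quarters, and the function names are made up for this example. At exactly 90%, the AWS portion of a $104 billion budget works out to about $94 billion, which the text rounds down to "about $90 billion."

```python
# Figures quoted in the text, in billions of USD.
FULL_YEAR_CAPEX = 104.0
Q4_CAPEX = 26.3
AWS_SHARE = 0.90


def annualized_run_rate(quarterly_capex: float) -> float:
    """Naive run rate: assume four quarters at the same pace (an assumption)."""
    return quarterly_capex * 4


def share_of_total(total: float, share: float) -> float:
    """Dollar amount implied by a percentage share of the total."""
    return total * share


run_rate = annualized_run_rate(Q4_CAPEX)
aws_capex = share_of_total(FULL_YEAR_CAPEX, AWS_SHARE)

print(f"Annualized run rate: ${run_rate:.1f}B")    # $105.2B
print(f"AWS share of capex:  ${aws_capex:.1f}B")   # $93.6B
```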

b. Custom silicon: Trainium2 (and soon Trainium3)

Alongside commodity GPUs, Amazon is building its own chips. The newly launched Trainium2 is central to lowering inference costs, while Trainium3 is slated to launch later this year. The plan is straightforward: rely less on Nvidia and more on vertically integrated hardware optimized for AWS services.

c. Robotics and automation in retail

About 25% of capex goes into physical automation—warehouses, logistics, drone and robot delivery systems. The company is even testing humanoid robots via indoor obstacle courses that mimic delivery conditions before real-world deployment.

2. Why the Stock Isn’t Celebrating—Yet

a. Slower-than-expected AWS growth

AWS continues to post solid high-teens growth, but that is slower than its pandemic-era peak and below the growth rates of peers like Microsoft and Google Cloud. With margins under pressure from the massive upfront investment, the cloud juggernaut isn’t yet showing the operating leverage investors expected.

b. A flat share price, a concerned market

While Amazon’s stock is hovering at low-single-digit gains for 2025, AI-first companies like Nvidia and Meta have seen double-digit runs. Investors are asking: where’s the earnings lift to justify such a hefty investment?

c. According to the analysts

Mixed signals emerged in Q4—AWS growth “matched Q3 levels,” said one analyst—but the cloud remains capacity-constrained. CEO Andy Jassy’s warning of “lumpy” cloud revenue did little to soothe concerns. Bank of America and UBS, however, maintain “Buy” ratings, noting potential margin rebound once capex peaks.

3. The Payoff Channels That Could Flip the Script

Deploying Claude via AWS

Amazon has committed up to $8 billion in funding to Anthropic, and Claude models are offered through Amazon Bedrock, with Anthropic naming AWS its primary cloud and training partner and committing to use Trainium chips. This vertical integration positions AWS as the hidden muscle powering AI without needing its own flagship model.

Retail robotics = multi‑billion margin uplift

Analysts estimate the robotics boom could save Amazon $7–10 billion annually by 2032. Already, the next-gen Shreveport fulfillment center, with roughly ten times the robotic density of older sites, has cut costs by ~25%.

4. Inference Is the New Oil—And Amazon Wants the Pipeline

One of the least-discussed but most important shifts in AI infrastructure is from training to inference. The latter—running models in real time—is becoming the dominant cost and revenue center. Amazon knows this.

Cheaper, faster, repeatable inference

While Nvidia dominates AI training with GPUs, Amazon is positioning Trainium2 (and soon Trainium3) to lead inference, where models are deployed across thousands of apps and services. The economics matter: inference is commonly estimated to account for 80–90% of long-term AI compute demand.

Amazon’s bet is that in-house silicon, tuned for generative workloads and optimized within AWS, will undercut Nvidia’s general-purpose chips. The aim? Become the “wholesale cost leader” of AI infrastructure.
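Why inference can end up dominating compute demand is easier to see with a toy model: training is a one-time cost, while inference recurs for as long as the model is in service. The sketch below uses purely hypothetical cost units (100 for training, 25 per month for inference), chosen only for illustration and not taken from Amazon or any vendor.

```python
def inference_share(training_cost: float,
                    monthly_inference_cost: float,
                    months: int) -> float:
    """Fraction of cumulative compute cost spent on inference after `months`."""
    inference_total = monthly_inference_cost * months
    return inference_total / (training_cost + inference_total)


# Hypothetical cost units, for illustration only.
TRAINING_COST = 100.0
MONTHLY_INFERENCE_COST = 25.0

for months in (4, 12, 36):
    share = inference_share(TRAINING_COST, MONTHLY_INFERENCE_COST, months)
    print(f"After {months:>2} months: inference is {share:.0%} of cumulative cost")
# After  4 months: inference is 50% of cumulative cost
# After 12 months: inference is 75% of cumulative cost
# After 36 months: inference is 90% of cumulative cost
```

Under these assumed numbers, inference passes the 80–90% range only after the model has been in service for a few years, which is why the per-query cost of inference hardware matters more the longer a model is deployed.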

Claude + Bedrock = vertical loop

Unlike OpenAI’s tight integration with Microsoft, Amazon’s Anthropic partnership is more modular—but no less strategic. Developers using Claude via AWS Bedrock are paying for compute, storage, and model access—all through Amazon. With each new app built on Claude, AWS gains sticky revenue without owning or branding the model itself.

This approach mirrors Amazon’s retail strategy: low-margin, high-volume infrastructure wins in the long run.

5. Amazon’s Hidden AI Edge: Retail and Corporate Automation

While Wall Street obsesses over chatbots and LLM benchmarks, Amazon is deploying AI in places most investors don’t look—warehouses, delivery networks, and even corporate back offices.

Warehouse AI = Cost saver, not cost center

At the Shreveport facility in Louisiana, Amazon has deployed 1,000+ robots—up from 400 at older sites—and increased robotic density tenfold. These bots not only move goods but predict inventory patterns and optimize layout in real time—classic examples of narrow AI quietly transforming margins.

AI agents are already trimming corporate fat

CEO Andy Jassy said in a recent all-hands: “We’re using AI agents to automate hundreds of internal tasks… this will reduce our corporate workforce over time.” This goes beyond productivity tools—Amazon is using generative agents to rewrite code, handle legal workflows, and even assist in procurement.

In other words, generative AI is both a product Amazon sells and a tool it uses to cut costs internally. That duality could mean a double boost to margins over time.

6. Why the Payoff Still Isn’t Guaranteed

Despite the long-term potential, Amazon’s bet is not risk-free.

Labor and ethical backlash

If AI-driven layoffs scale—especially in warehousing or low-wage corporate roles—Amazon could face fresh regulatory scrutiny or employee unrest. The company already has a tense relationship with labor unions and state lawmakers.

Competition is catching up

While AWS remains dominant, Microsoft Azure is gaining share, especially among enterprise clients integrating OpenAI’s models. Google Cloud is also pushing aggressively with Gemini models and TPU-based stacks. And newer challengers like Together AI and Mistral are offering lightweight, low-cost alternatives for startups.

Visibility still lacking

Amazon’s developer tools, like Bedrock, aren’t yet as visible or intuitive as Microsoft’s Copilot ecosystem. Unless Amazon improves AI marketing and UX, it risks being the infrastructure that others win on top of.

Conclusion: A Long Bet on Infrastructure Power

Amazon’s $104 billion AI investment might not be flashy, but it’s foundational. While other companies chase attention with chatbots and viral demos, Amazon is laying the groundwork to own the infrastructure layer of the AI economy. From Claude deployments to Trainium-powered inference to robotic warehouses, its strategy is clear: build quietly, scale massively, and monetize everything underneath.

This isn’t a story about quarterly wins—it’s about shaping the operating system of AI-driven business. If inference becomes the new oil, Amazon wants to be the pipeline. And when that demand fully kicks in—likely in 2025 and beyond—Wall Street might suddenly see this “boring” spend as the most lucrative move of all.